Less is More: Jumpstarting Productivity with Small Teams

Software Development

Issue: October, 1997

Adding a deck to the back of your house calls for different skills than adding a story to the top of your house. Building a 60-story office building requires a different approach altogether. A deck can be built by an amateur if he or she is sufficiently motivated, and so, occasionally, can an addition to a house. A skyscraper cannot, and should not, be built by an amateur, no matter how motivated that person may be.

For years, software engineering has concerned itself with skyscraper-sized projects: aircraft control systems, military defense programs, nationwide computer networks, complex operating systems, and the like. But with the growth of both Internet development and component-based development, software developers are beginning to see an increasing number of truly useful applications developed as “3 x 3” projects—three developers working for three months. Traditional software engineering has sometimes dismissed those “small” projects as not being worthy of serious attention. But such projects increasingly make up the bread and butter of many developers’ responsibilities, and they deserve some attention.

Some people who work alone or with only one or two other developers wouldn’t have it any other way. Small teams are more productive in many ways than large teams, but some best practices and experience from large teams can help small groups become even more productive. Before I explain how, I’ll describe how team size and project size affect team dynamics, team productivity, and the quality of the software developed.

Team Size, Communication, and Memory

Communication flows more easily on small teams than on large teams. If you’re the only person on a project, communication is simple. The only communication path is between you and the customer. As the number of people on a project increases, however, so does the number of communication paths. It doesn’t increase additively as the number of people increases; it increases multiplicatively, in proportion to the square of the number of people.

Figure 1 illustrates how a two-person project has only one path of communication. A five-person project has 10 paths. A 10-person project has 45 paths, assuming that every person talks to every other person. The 2% of projects that have 50 or more programmers have at least 1,200 potential paths. The more communication paths you have, the more time you spend communicating, and the more opportunities for communication mistakes are created. Thus, larger-size projects demand organizational techniques that streamline communication or limit it in sensible ways.

Figure 1. Communication paths increase multiplicatively as team size increases linearly.
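The path counts quoted above follow from counting every distinct pair of people on the team, which is n(n - 1)/2 for a team of n. The following is a minimal sketch of that arithmetic; the function name `communication_paths` is only illustrative, and it assumes, as the text does, that every person talks to every other person.

```python
def communication_paths(team_size: int) -> int:
    """Return the number of distinct person-to-person paths on a team.

    Assumes every team member talks to every other member, so the
    count is the number of unordered pairs: n * (n - 1) / 2.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    # Team sizes mentioned in the text.
    for n in (2, 5, 10, 50):
        print(f"{n:>2} people -> {communication_paths(n)} paths")
```

For a 50-person team the formula gives 1,225 paths, which is consistent with the "at least 1,200" figure in the text.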

The typical approach taken to streamline communication on large projects is to formalize communication with written documents. Instead of 50 people talking to each other in every conceivable combination, 50 people read and write documents. Small projects can avoid documents that are created solely for the sake of streamlining communication.

There are some ways documentation can work to a small team’s benefit, however; documentation serves as an aid to developers’ fallible memories. People’s memories aren’t any better on small projects than they are on large projects, and it’s important to retain permanent records on any project that lasts longer than a few weeks. The small project size means that records can be less formal. Design on a small project might consist of a set of diagrams captured on flip charts rather than a formal architecture document. A project-wide e-mail archive can be an efficient substitute for a large project’s monolithic documents. Small projects can achieve some economies based on small team size, but they will always benefit from permanent supplements to human memory.

Effect of Size on Different Kinds of Software Development Activities

As project size and the need for formal communications increase, the kinds of activities that make up a software project change dramatically. Figure 2 shows the proportion of activities on projects of different sizes. The construction activities of detailed design, code and debug, and unit test are shown in gray. Requirements development isn’t shown because the time spent developing requirements isn’t necessarily related to the time spent implementing them.

Figure 2. As the size of the project increases, construction consumes a smaller percentage of the total development effort.

On very large projects, architecture, integration, system test, and construction each take up about the same amount of effort. On projects of medium size, construction starts to become the dominant activity. On a small project, construction is the most prominent activity by far, using as much as 80% of the time. In short, as project size decreases, construction becomes a greater part of the total effort.

Small projects tend to focus on construction, and I think that’s appropriate and healthy. That doesn’t mean they should completely ignore architecture, design, and project planning, however. Each plays an important role in the project’s outcome, and overlooking any one of them can lead to increased errors.

Effect of Size on Errors

You might not think project size would affect what kind of errors will be experienced, but as project size increases, a larger number of errors are usually attributed to mistakes in analysis and design. Figure 3 shows the general relationship.

Figure 3.

According to Capers Jones’s “Program Quality and Programmer Productivity” (IBM Technical Report TR 02.764, Jan. 1977), on small projects, construction errors make up about 75% of all errors found. Methodology has less influence on small projects, and the biggest influence on program quality is the skill of the individual writing the program.

On typical larger projects, construction errors taper off to about 50% of total errors, while requirements and architecture errors make up the difference. Presumably, this is related to the fact that proportionately more time is spent in requirements development and architecture on large projects, so the opportunity for mistakes in those activities is proportionately larger.

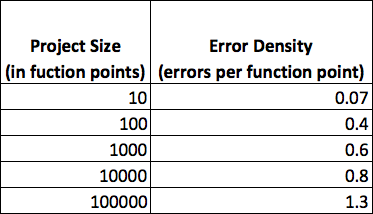

The defect density (number of defects per line of code or per function point) also changes with project size. You would naturally expect a project that’s twice as large as another to have twice as many errors, but the larger product is likely to have even more than that. Table 1 lists the range of defect densities you can expect on projects of various sizes.

Table 1. General relationship between project size and error density for delivered software in the United States.

Source: Adapted from Capers Jones, Applied Software Measurement, 2d Ed. (McGraw-Hill, 1997).

The data in Table 1 was derived from specific projects, so the numbers might bear little resemblance to the projects on which you’ve worked. As a snapshot of work in the software field, however, it’s illuminating. It indicates that the number of errors increases dramatically as project size increases, with very large projects having many times more errors per function point than small projects. Small projects are generally less complex, and developers on small projects can often work without all the quality assurance overhead needed on a large project.

Effect of Size on Productivity

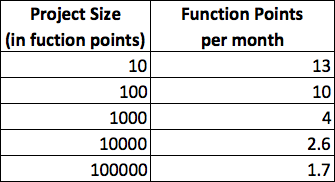

Productivity and software quality have a lot in common with respect to project size. Both are optimal on the smallest projects. How big does a project need to be before size begins to affect productivity? In “Prototyping Versus Specifying: A Multiproject Experiment” by B.W. Boehm, T.E. Gray, and T. Seewaldt (IEEE Transactions on Software Engineering, May 1984), the authors reported that smaller teams completed their projects with 39% higher productivity than larger teams. The size of the teams? Two people for the small projects, three for the large projects. Table 2 shows the general relationship between project size and productivity.

Table 2. General relationship between project size and productivity for delivered software in the United States.

Source: Adapted from Capers Jones, Applied Software Measurement, 2d Ed. (McGraw-Hill, 1997).

Productivity of any specific project is significantly influenced by its personnel, methodologies, product complexity, programming environment, tool support, and many other factors, so it’s likely that the data in Table 2 doesn’t apply directly to your current project. Take the specific numbers with a grain of salt.

Realize, however, that the general trend is significant. The productivity of the smallest projects is about 10 times the productivity of the largest ones. Even jumping from the middle of one range to the middle of the next (say, from a program of 100 function points to a program of 1,000 function points), you could expect productivity to be cut roughly in half. This table shows one of the reasons developers prefer to work on small projects: they can get more work done! After experiencing the productivity buzz of a high-performance, small-project team, it’s hard to readjust to the methodical approaches needed to ensure success on a larger project.

Programs, Products, and Systems

Lines of code and team size aren’t the only influences on project size. A more subtle influence is the quality and complexity of the final software. Suppose the original version of your Gigatron program took only one month to write and debug. It was a single program written, tested, and documented by a single person. If the first version of Gigatron took only one month, why did its next full release take six months?

As Fred Brooks pointed out in The Mythical Man-Month, Anniversary Edition (Addison-Wesley, 1995), the simplest kind of software is a single “program” that’s used by the person who developed it, or under controlled circumstances by a few other users. A program is typically used in-house.

A more sophisticated kind of software is a “product”—software that’s intended for use by people other than the original developer. This kind of software is typically intended for external users in environments that differ from the environment in which the software was created. It requires more extensive testing and documentation. The goal of program development is to try to be sure there is at least one right way to use the software. The goal of product development is to try to be sure that there is no wrong way to use the software. A software product costs about three times as much to develop as a software program.

In addition to the difference between programs and products, another kind of sophistication relates to the interactions among a group of programs that work together. Such a group is called a software “system.” Development of a system is more complicated than development of a program because of interfaces between programs and the care needed to integrate them. A software system costs about three times as much as a software program.

When a software “system product” is developed, it has the testing and documentation requirements of a product and the interaction complexity of a system. Software system products cost about nine times as much as programs with similar functionality. Organizations are sometimes surprised when they set out to develop a small program, and discover they really needed to develop a system product—at nine times the cost. Similarly, organizations used to developing system products can bury a small project in bureaucratic overhead if all they really need is a software program.
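The multipliers in this section compound: a product costs about three times a program, a system costs about three times a program, and a system product costs about nine times a program. As a rough sketch of that arithmetic (the function and constant names are illustrative, and the factors are the approximate ones quoted above, not precise estimates):

```python
# Approximate cost factors relative to a plain program, as quoted in the text.
PRODUCT_FACTOR = 3  # extra testing and documentation for external users
SYSTEM_FACTOR = 3   # interfaces and integration among cooperating programs


def relative_cost(is_product: bool, is_system: bool) -> int:
    """Return the approximate cost multiplier relative to a simple program."""
    cost = 1
    if is_product:
        cost *= PRODUCT_FACTOR
    if is_system:
        cost *= SYSTEM_FACTOR
    return cost


if __name__ == "__main__":
    program_months = 1  # hypothetical effort for the plain program
    for label, product, system in [
        ("program", False, False),
        ("product", True, False),
        ("system", False, True),
        ("system product", True, True),
    ]:
        months = program_months * relative_cost(product, system)
        print(f"{label}: about {months} month(s)")
```

Under these assumptions, a one-month program becomes roughly a nine-month effort once it has to be both a product and a system.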

Applying Size Considerations to Small Projects

Fortunately for those of us who hate to see software engineering research go to waste, the methodologies developed for skyscraper-sized projects are a lot easier to scale down than the methodologies used in deck-sized projects are to scale up.

One method for determining the degree of formality required has been developed by the U.S. Department of Defense. You might think that military intelligence is a contradiction in terms, but the U.S. Department of Defense is the largest user of computers and computer software in the world. It is also one of the largest sponsors of programming research.

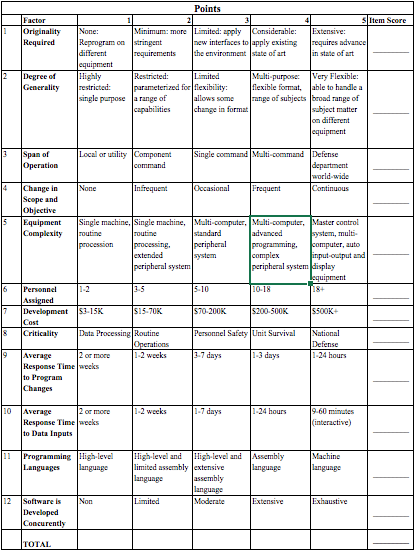

The Department of Defense’s approach involves scoring a programming project in each of 12 categories, using a formality worksheet to arrive at a score of between 12 and 60 points. Table 3 illustrates this worksheet.

Table 3. Project Formality Worksheet.

Source: Adapted from DOD-STD-7935 in Wicked Problems, Righteous Solutions (DeGrace and Stahl 1990).
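The worksheet arithmetic can be sketched in a few lines. This is an illustration only: it assumes each of the 12 categories is rated on an integer scale from 1 to 5, which is inferred from the 12-to-60 total range given in the text, and it does not reproduce the worksheet's actual category names. The name `formality_score` is hypothetical.

```python
from typing import Iterable

CATEGORY_COUNT = 12  # number of worksheet categories, from the text
MIN_RATING = 1       # assumed lowest rating per category (12 * 1 = 12)
MAX_RATING = 5       # assumed highest rating per category (12 * 5 = 60)


def formality_score(ratings: Iterable[int]) -> int:
    """Sum per-category ratings into a total formality score (12 to 60)."""
    ratings = list(ratings)
    if len(ratings) != CATEGORY_COUNT:
        raise ValueError(f"expected {CATEGORY_COUNT} ratings, got {len(ratings)}")
    for rating in ratings:
        if not MIN_RATING <= rating <= MAX_RATING:
            raise ValueError(f"rating {rating} is outside {MIN_RATING}..{MAX_RATING}")
    return sum(ratings)


if __name__ == "__main__":
    # Hypothetical ratings for a small in-house tool: mostly low demands.
    small_project = [1, 1, 2, 1, 1, 2, 1, 1, 1, 2, 1, 1]
    print(formality_score(small_project))
```

A total near the bottom of the range suggests little formality is needed; a total near the top suggests the project needs as much structure as possible.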

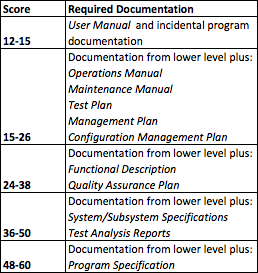

A score of 12 means the project’s demands are light, and little formality is needed. A score of 60 means that the project is extremely demanding and needs as much structure as possible. Table 4 lists the documentation recommended for projects of different difficulties. Depending on the needs of the specific project, a data requirements document, a database specification, and implementation procedures might also be recommended.

Table 4. Recommended documentation for projects of different difficulties.

Sources: DOD-STD-7935 in Wicked Problems, Righteous Solutions (Peter DeGrace and Leslie Stahl 1990), and Guidelines for Documentation of Computer Programs and Automated Data Systems (FIPS PUB 38).

This documentation isn’t created for its own sake. The point of writing a quality assurance plan isn’t to exercise your writing muscles; it is to force you to think carefully about quality assurance and to explain your plan to everyone else. The documentation is a tangible by-product of the real work that must be done to plan and construct a software system. If you feel like you’re going through the motions and writing generic documents, you’re not getting the potential benefits.

Useful Practices on Small Projects

Some practices are valuable no matter how small the project is. Aside from proper documentation, the following recommendations apply to any project.

Emphasize code readability. Whether your project is 3 x 3, 1 x 1, or 50 x 18, project members will spend a lot of time writing, reviewing, and revising the project’s source code. Maintenance programmers spend more than half of their time figuring out what source code does. You can make that job much easier by emphasizing good layout; careful variable, function, and class names; and meaningful comments.

Build a user interface prototype. User interface prototyping is useful on even the smallest projects because it helps avoid the serious error of designing, implementing, testing, and documenting software that users ultimately refuse to use. On small projects you can plan to evolve the prototype into the final software. On larger projects, you’re typically better off creating a throwaway prototype and then building the real software separately.

Hold technical reviews of designs and code. Technical reviews are useful on projects as small as one line of code. According to Daniel P. Freedman and Gerald M. Weinberg in Handbook of Walkthroughs, Inspections and Technical Reviews, Third Edition (Dorset House, 1990), in one software maintenance organization, 55% of one-line maintenance changes were in error before code reviews were introduced. After reviews were introduced, only 2% were in error.

Use automated source code control. Automated source code control creates virtually no overhead and pays for itself the first time you need to retrieve the version of the software you were working on yesterday. It is useful on even the smallest, one-person projects.

Use defect-tracking software. One of the most embarrassing software errors is to release software that contains an error that you knew about and simply forgot to fix. Like automated source code control, defect-tracking software adds virtually no overhead to a project and helps to prevent needless mistakes. Large projects typically need sophisticated, networked defect-tracking software; small projects can get by with a tool as simple as a defect-tracking spreadsheet.

Create and use checklists. Checklists are an often-overlooked, low-tech development tool, but they are useful in many areas. They are created from experience, so they’re inherently practical. Use them at requirements time to avoid missing key requirements. Use them at architecture and design time to be sure your design accounts for all relevant considerations. Use them during design and code reviews to help reviewers catch the most common problems. Use them at software release to ensure that, in the last-minute rush to release the software, you don’t make careless mistakes.

The Most Important Lesson from Large Projects

Whether you’re building a deck or a skyscraper, it’s cheaper and easier to change your plans during the diagram phase than during construction. A deck project might seem small by comparison, but if you decide to move a support post after you’ve dug the post hole, mixed concrete for the footing, poured the footing, set the post into the concrete, and let the concrete cure, you’ll wish you had spent a few more minutes scrutinizing your design. (Believe me, I have the blisters to show for it.) And that’s only a weekend project.

If software engineering on large projects has taught us one thing, it’s the importance of being strategic about the progression from requirements development to design, implementation, and testing. The traditional waterfall life cycle model has earned a bad reputation for its dogmatic step-by-step progression from requirements development to system testing. Overly bureaucratic though it might be, the waterfall model embodies an important truth: defects are a lot cheaper to correct in the earlier phases of requirements development and architecture than they are in the later phases of construction and system testing. A one-sentence statement of requirements can give rise to a handful of design diagrams, which can flow into a few hundred lines of code, several dozen test cases, and many pages of user documentation. If you make an error at requirements time, it’s a whole lot cheaper and easier to correct that defect at requirements or architecture time than it is to overlook it until later. An erroneous requirement may not seem as rigid as a post set in concrete, but it will be every bit as hard to change after several hundred lines of code have been written to implement it.

Projects of different sizes benefit from different approaches. Practices that could be considered unconscionably sloppy on a large project might be overly rigorous for a small one. When the large-project training wheels come off, a small project team can cover a lot of ground. But an overly eager small project team can earn itself a nice set of scrapes and bruises if it doesn’t remember what those large-project training wheels were for. Small projects are exhilarating. They can be exhilarating and productive when the team remembers a few of the lessons that large project teams learned the hard way.

Further Reading

Wicked Problems, Righteous Solutions: A Catalog of Modern Software Engineering Paradigms, by Peter DeGrace and Leslie Stahl, Yourdon Press, 1990. This book catalogs the approaches to developing software. DeGrace and Stahl emphasize that any development approach must vary as project size varies. The section titled “Attenuating and Truncating” in Chapter 5 discusses customizing software development processes based on project size and formality. It includes descriptions of models from NASA and the Department of Defense and many interesting illustrations.

Software Engineering Economics, by Barry W. Boehm, Prentice-Hall Inc., 1981. This is an extensive treatment of the cost, productivity, and quality ramifications of project size and other variations in the software development process. It discusses the effect of size on construction and other activities. Chapter 11 is an excellent explanation of software’s diseconomies of scale. Other project-size information is interspersed throughout the book.

Author: Steve McConnell, Construx Software